Run the following to initialize the environment and import the libraries necessary for this notebook.

%matplotlib inline

from functools import lru_cache

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

from IPython import display

from ipywidgets import IntSlider, interact

from sklearn import datasets, tree

from sklearn.model_selection import (

StratifiedKFold,

cross_val_predict,

cross_val_score,

train_test_split,

)

plt.rcParams["figure.figsize"] = (8, 4)Data Preparation¶

About the dataset¶

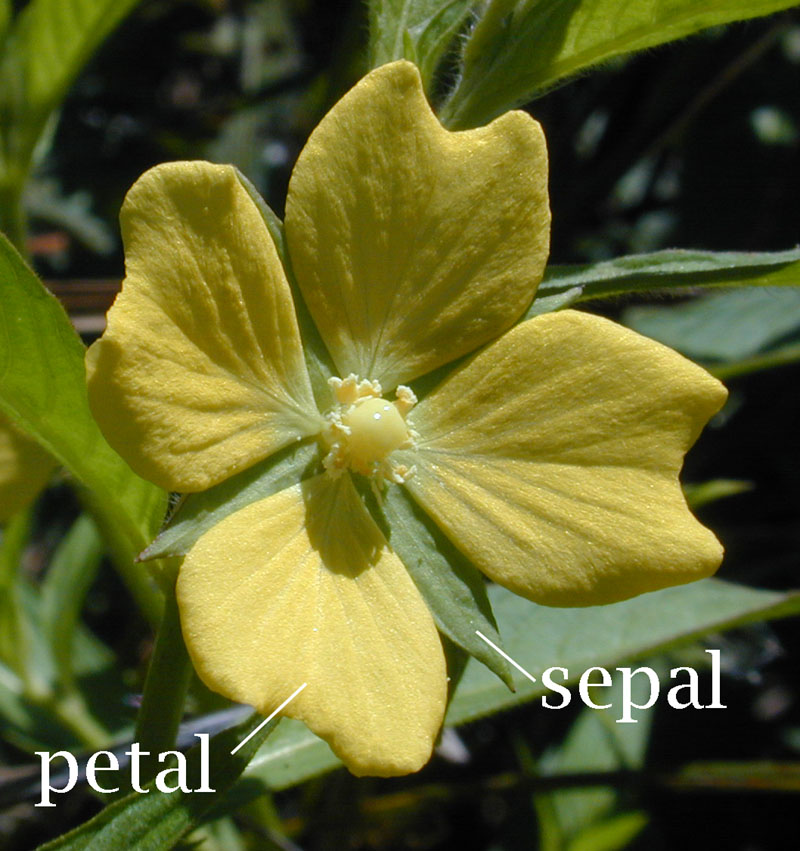

We will use a popular dataset called the iris dataset. Iris is a flower with three different species shown below.

The three iris species differ in the lengths and widths of their petals and sepals.

Figure 4:Petal and sepal of a flower

A standard data mining task is to train a model that can classify the spieces (target) automatically based on the lengths and widths of the petals and sepals (input features).

Load dataset from scikit-learn¶

How to load the iris dataset?

To load the iris dataset, we can simply import the sklearn.datasets package.

from sklearn import datasets

iris = datasets.load_iris()

type(iris) # object typesklearn stores the dataset as a Bunch object, which is essentially a bunch of properties put together.

How to learn more about a library?

Detailed documentation can be found at https://

We can use the symbol ? to obtain the docstring of an object and ?? to obtain its source code, if available.

datasets.load_iris?datasets.load_iris??How to learn more about the dataset?

The property DESCR (description) is a string that contains some background information about the dataset:

print(iris.DESCR)All the properties of an object can be listed using the built-in function dir (directory):

dir(iris)How to show the data?

The properties data and target contains the data values.

type(iris.data), type(iris.target)The data are stored as numpy array, a powerful data type optimized for performance. It provides useful properties and methods to describe and process the data:

iris.data.shape, iris.data.ndim, iris.data.dtypeiris.data is a 150-by-4 2-dimensional array of 64-bit floating-point numbers.

- 150 corresponds to the number of instances, while

- 4 corresponds to the number of input attributes.

To show the input feature names:

iris.feature_namesTo show the means and standard deviations of the input features:

iris.data.mean(axis=0), iris.data.std(axis=0)All the public properties/methods of numpy array are printed below:

import numpy as np # import numpy and rename it as np

[

attr for attr in dir(np.ndarray) if attr[0] != "_"

] # private attributes begin with underscoreWhat is the target feature?

The target variable of the iris dataset is the flower type, whose names are stored by the following property:

iris.target_namesiris.target is an array of integer indices from {0, 1, 2} for the three classes.

iris.target# YOUR CODE HERE

raise NotImplementedError()

shape, ndim, dtype# tests

assert (

isinstance(shape, tuple) and isinstance(ndim, int) and isinstance(dtype, np.dtype)

)# YOUR CODE HERE

raise NotImplementedError()

feature_min, feature_max# tests

assert feature_min.shape == (4,) == feature_max.shapeCreate pandas DataFrame¶

The package pandas provides additional tools to display and process a dataset.

First, we translate the Bunch object into a pandas DataFrame object.

import pandas as pd

# write the input features first

iris_df = pd.DataFrame(data=iris.data, columns=iris.feature_names)

# append the target values to the last column

iris_df["target"] = iris.target

iris_df # to display the DataFrameIn jupyter notebook, a DataFrame object is conveniently displayed as an HTML table. We can control how much information to show by setting the display options.

We can also display the statistics of different numerical attributes using the method describe and boxplot.

iris_df.describe()iris_df.boxplot()How to handle nominal class attribute?

Note that the boxplot also covers the target attribute, but it should not. (Why?) Let’s take a look at the current datatypes of the different attributes.

print(iris_df.dtypes)The target is regarded as a numeric attribute with type integer int64. Instead, the target should be categorical with only three possible values, one for each iris species.

To fix this, we can use the astype method to convert the data type automatically. (More details here.)

iris_df.target = iris_df.target.astype("category")

iris_df.boxplot() # target is not plotted as expected

iris_df.target.dtypeWe can also rename the target categories {0, 1, 2} to the more meaningful names of the iris species in iris.target_names.

(See the documentation.)

iris_df.target = iris_df.target.cat.rename_categories(iris.target_names)

iris_df # check that the target values are now setosa, versicolor, or virginica.# YOUR CODE HERE

raise NotImplementedError()

target_counts# tests

assert target_counts.shape == (3,)How to select specific rows and columns?

The following uses ipywidget to show the different ways of selecting/slicing the rows of a DataFrame.

from ipywidgets import interact

@interact(

command=[

"iris_df.head()",

"iris_df[0:4]",

"iris_df.iloc[0:4]",

"iris_df.loc[0:4]",

"iris_df.loc[iris_df.index.isin(range(0,4))]",

"iris_df.loc[lambda df: df.target=='setosa']",

"iris_df.tail()",

"iris_df[-1:]",

]

)

def select_rows(command):

output = eval(command)

display.display(output)The following shows the different ways of slicing the columns.

@interact(

command=[

"iris_df.target",

'iris_df["target"]',

'iris_df[["target"]]',

"iris_df[iris_df.columns[:-1]]",

"iris_df.loc[:,iris_df.columns[0]:iris_df.columns[-1]]",

'iris_df.loc[:,~iris_df.columns.isin(["target"])]',

"iris_df.iloc[:,:-1]",

]

)

def select_columns(command):

output = eval(command)

display.display(output)For instance, to compute the mean values of the input features for iris setosa:

iris_df.iloc[lambda df: (df["target"] == "setosa").to_numpy(), :-1].mean()We can also use the method groupby to obtain the mean values by flower types:

iris_df.groupby("target", observed=False).mean()# YOUR CODE HERE

raise NotImplementedError()

iris2d_df# tests

assert set(iris2d_df.columns) == {"petal length (cm)", "petal width (cm)", "target"}Alternative methods of loading a dataset¶

The following code loads the iris dataset from an ARFF file instead.

import io

import urllib.request

from scipy.io import arff

ftpstream = urllib.request.urlopen(

"https://raw.githubusercontent.com/Waikato/weka-3.8/master/wekadocs/data/iris.arff"

)

iris_arff = arff.loadarff(io.StringIO(ftpstream.read().decode("utf-8")))

iris_df2 = pd.DataFrame(iris_arff[0])

iris_df2["class"] = iris_df2["class"].astype("category")

iris_df2Pandas also provides a method to read the iris dataset directly from a CSV file locally or the internet, such as the UCI respository.

iris_df3 = pd.read_csv(

"https://archive.ics.uci.edu/ml/machine-learning-databases/iris/iris.data",

sep=",",

dtype={"target": "category"},

header=None,

names=iris.feature_names + ["target"],

)

iris_df3The additional arguments dtype, header, and names allow us to specify the attribute datatypes and names.

Training and Testing¶

To obtain unbiased performance estimates of a learning algorithm, the fundamental principle is to use separate datasets for training and testing.

If there is only one dataset, we should split it into training sets and test sets by random sampling to avoid bias in the performance estimate. In the following subsections, we will illustrate some methods of splitting the datasets for training and testing.

Stratified holdout method¶

This method randomly samples data for training or testing without replacement. It is implemented by the train_test_split function from the sklearn.model_selection package.

from sklearn.model_selection import train_test_split

X_train, X_test, Y_train, Y_test = train_test_split(

iris_df[iris.feature_names], # We also separated the input features

iris_df.target, # and target as X and Y for the training and test sets.

test_size=0.2, # fraction for test set

random_state=1,

stratify=iris_df.target,

) # random seed

X_train.shape, X_test.shape, Y_train.shape, Y_test.shapeThe fraction of holdout test data is:

len(Y_test) / (len(Y_test) + len(Y_train))The class proportion of the iris dataset can be plotted as follows:

iris_df.target.value_counts().plot(kind="bar", ylabel="counts")We can check the effect of stratification on the class proportions:

@interact(stratify=False, data=["Y_train", "Y_test"], seed=(0, 10))

def plot_class_proportions(stratify, data, seed=0):

Y_train, Y_test = train_test_split(

iris_df.target,

test_size=0.2,

random_state=seed,

stratify=iris_df.target if stratify else None,

)

eval(data).value_counts().sort_index().plot(kind="bar", ylabel="counts")We first apply a learning algorithm, say the decision tree induction algorithm, to train a classifier using only the training set.

from sklearn import tree

clf = tree.DecisionTreeClassifier(random_state=0) # the training is also randomized

clf.fit(X_train, Y_train) # fit the model to the training setWe can use the predict method of the trained classifier to predict the flower type from input features.

Y_pred = clf.predict(X_test)

Y_predThe following code returns the accuracy of the classifier, namely, the fraction of correct predictions on the test set.

accuracy_holdout = (Y_pred == Y_test).mean()

accuracy_holdoutThe score method performs essentially the same computation. The following code uses f-string to format the accuracy to 3 decimal places.

print(f"Accuracy: {clf.score(X_test, Y_test):0.3f}")To see input features of misclassified test instances:

X_test[Y_pred != Y_test]# YOUR CODE HERE

raise NotImplementedError()

accuracy_holdout_training_setfrom functools import lru_cache

import numpy as np

@lru_cache(None) # cache the return value to avoid repeated computation

def holdout_score(seed):

clf = tree.DecisionTreeClassifier(random_state=seed)

X_train, X_test, Y_train, Y_test = train_test_split(

iris_df[iris.feature_names], iris_df.target, test_size=0.2, random_state=seed

)

# YOUR CODE HERE

raise NotImplementedError()

@lru_cache(None)

def subsampling_score(N):

return sum(holdout_score(i) for i in range(N)) / N# tests

assert np.isclose(subsampling_score(50), 0.9466666666666663)After implementing subsampling_score, the following code should plot the mean accuracies for different N. Check that the variation becomes smaller as N increases.

import matplotlib.pyplot as plt

plt.stem([subsampling_score(i) for i in range(1, 50)])

plt.xlabel(r"$N$")

plt.ylabel(r"Mean accuracy")The documentation here describes another alternative called the bootstrap method, which samples without replacement. Unlike the original bootstrap method, the test set is also sampled with replacement instead of taken directly from the out-of-the-bag instances, which are not used for training.

Stratified cross-validation¶

Another method of evaluating a classification algorithm is to randomly partition the data into folds, which are nearly equal-sized blocks of instances. The score is the average of the accuracies obtained by using each fold to test a classifier trained using the remaining folds.

The module sklearn.model_selection provides two functions cross_val_predict and cross_val_score for this purpose.

from sklearn.model_selection import StratifiedKFold, cross_val_predict, cross_val_score

cv = StratifiedKFold(n_splits=5, random_state=0, shuffle=True)For instance, the following returns the misclassified instances by 5-fold cross-validation.

iris_df["prediction"] = pd.Categorical(

cross_val_predict(clf, iris_df[iris.feature_names], iris_df.target, cv=cv)

)

iris_df.loc[lambda df: df["target"] != df["prediction"]]clf = tree.DecisionTreeClassifier(random_state=0)

scores = cross_val_score(clf, iris_df[iris.feature_names], iris_df.target, cv=5)

print("Accuracies: ", ", ".join(f"{acc:.4f}" for acc in scores))

print(f"Mean accuracy: {scores.mean():.4f}")# YOUR CODE HERE

raise NotImplementedError()

accuracy_cv